Parmy Olson: Anthropic's secret weapon is its cult of safety

Published in Op Eds

Silicon Valley’s most ideologically driven company may have become its most commercially dangerous.

This week’s $300 billion selloff of software and financial services stocks was apparently sparked by Anthropic PBC and a new legal product the artificial intelligence startup had released. However pointless you may think it is to attribute market routs to a single trigger, the worry about Anthropic’s disruption puts a spotlight on its seemingly unstoppable productivity.

The company, which has roughly 2,000 employees, says it launched more than 30 products and features in January alone. On Thursday, it kept the momentum going with Claude Opus 4.6, a new model designed to handle knowledge-work tasks that will almost certainly raise the heat against legacy software-as-a-service (SaaS) companies like Salesforce and ServiceNow.

ChatGPT owner OpenAI has a workforce double the size of Anthropic’s, while Microsoft Corp. and Alphabet Inc.’s Google have 228,000 and 183,000 staff respectively, and boast enormous capital positions and distribution networks. Yet Anthropic’s AI tools for generating computer code and operating computers go beyond anything these larger companies have managed to launch. OpenAI and Microsoft have struggled to ship products with as much impact recently.

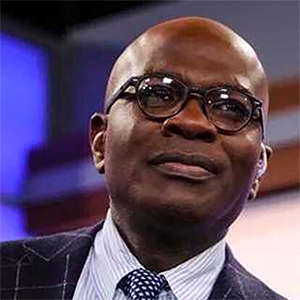

Anthropic’s ruthless efficiency comes in part from a paradoxical source: a mission-obsessed culture. It was founded by former staff at OpenAI who believed that company was too blasé about safety, specifically toward the existential risk that AI posed for humankind. The issue morphed into a kind of ideology at Anthropic, with Chief Executive Officer Dario Amodei its high priest and visionary.

Twice a month, Amodei convenes his staff for a Dario Vision Quest, or DVQ, where the bespectacled CEO will speak at length about building trustworthy AI systems that are aligned with human values, as well as broader issues like geopolitics and the impact Anthropic’s tech will have on the labor market. Amodei warned last May that AI advancements could eliminate up to 50% of entry-level office jobs within the next one to five years, an outcome his own company seems willing to fuel thanks to its near-religious zeal for safe AI. The safety of employment doesn’t seem to factor into Anthropic’s ideals.

But people close to the company describe a cult-like atmosphere, where staff are aligned on the mission and profess to have faith in Amodei. “Ask anyone why they’re here,” one of the company’s lead engineers, Boris Cherny, told me recently. “Pull them aside and the reason they will tell you is to make AI safe. We exist to make AI safe.”

When leaders at Meta Platforms Inc. went on an expensive hiring spree last year for senior AI researchers, their pitch to Anthropic employees was an assurance that Meta would move away from building open-source AI systems, which are free to use and alter. Anthropic staff saw open-source development as fraught with danger.

Earlier this month Amodei published a 20,000-word essay about the imminent civilizational risk that AI poses, while the company released a lengthy "constitution" for flagship system Claude, suggesting its AI might have some kind of consciousness or moral status.

That is as much a safety measure as it is philosophical handwringing, since the constitution, aimed at guiding Claude, gives the system clearer guidelines on how to process the possibility of being shut down — what it might see as death.

The company’s incessant safety focus has made its models among the most honest on the market, meaning they are less likely to hallucinate and more likely to admit to not knowing something instead, according to a ranking done by researchers at Scale AI, which is backed by Meta. That in turn has rendered Anthropic more trustworthy to enterprise clients, who are growing in number.

Its obsession is also unusual in an industry prone to mission drift, where tech companies are founded on noble notions of improving humanity — before the obligations to investors take over. Remember Google’s “don’t be evil” motto? And OpenAI, founded to “benefit humanity” as a nonprofit unhindered by financial constraints, is another case in point.

But Anthropic’s mission-driven culture has the added bonus of eliminating the kind of internal friction that tends to slow things down at the corporate bureaucracies of Google and Microsoft, as staff work in lockstep to achieve the company’s calling. The result is that safety-obsessed Amodei, who has the harried look of a mad scientist, is shipping more products than some of the biggest names in Silicon Valley.

“Military historians often argue that the sense of fighting for a noble cause drives armies to perform better,” says Sebastian Mallaby, author of a forthcoming book on Google DeepMind and a senior fellow in international economics at the Council on Foreign Relations. He says that Anthropic’s advantage also comes from having focused on building a remarkably effective coding tool, known as Claude Code, and winning enterprise customers on that basis, as opposed to OpenAI, which has chased multiple avenues. Sam Altman’s company is suffering from “the arrogance of the front-runner,” he adds.

Anthropic is now raising $10 billion at a $350 billion valuation, which means the pressure to prioritize growth over safety will only intensify. Amodei has built a culture that ships. The question is whether that culture can hold when the stakes get higher, and as it continues to rattle markets, and potentially many jobs too.

_____

This column reflects the personal views of the author and does not necessarily reflect the opinion of the editorial board or Bloomberg LP and its owners.

Parmy Olson is a Bloomberg Opinion columnist covering technology. A former reporter for the Wall Street Journal and Forbes, she is author of “Supremacy: AI, ChatGPT and the Race That Will Change the World.”

_____

©2026 Bloomberg News. Visit at bloomberg.com. Distributed by Tribune Content Agency, LLC.
